FROM DATA TO ASSETS.

Big Data with Lobster_data.

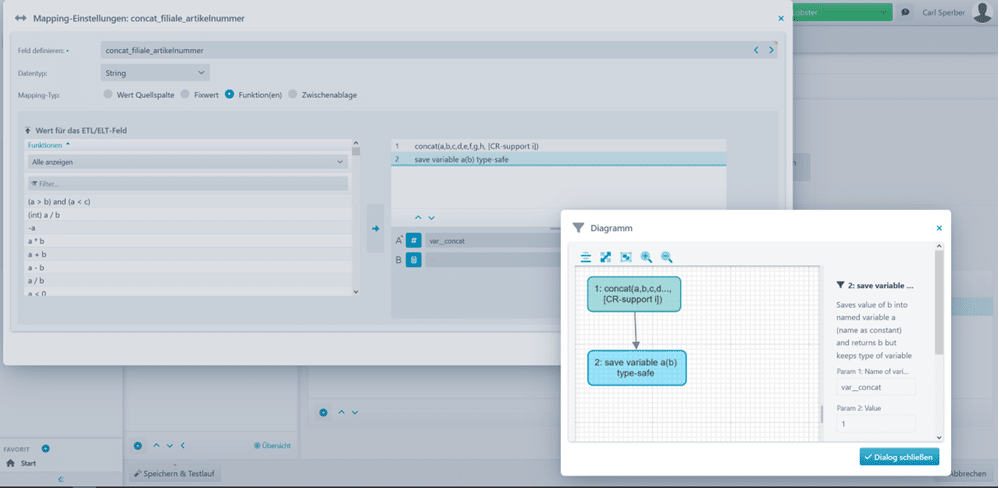

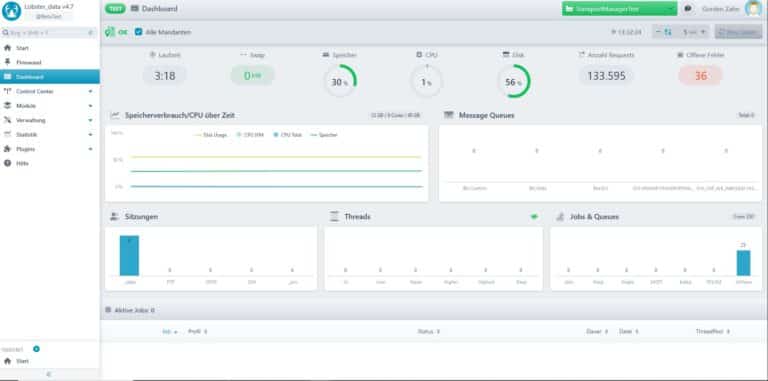

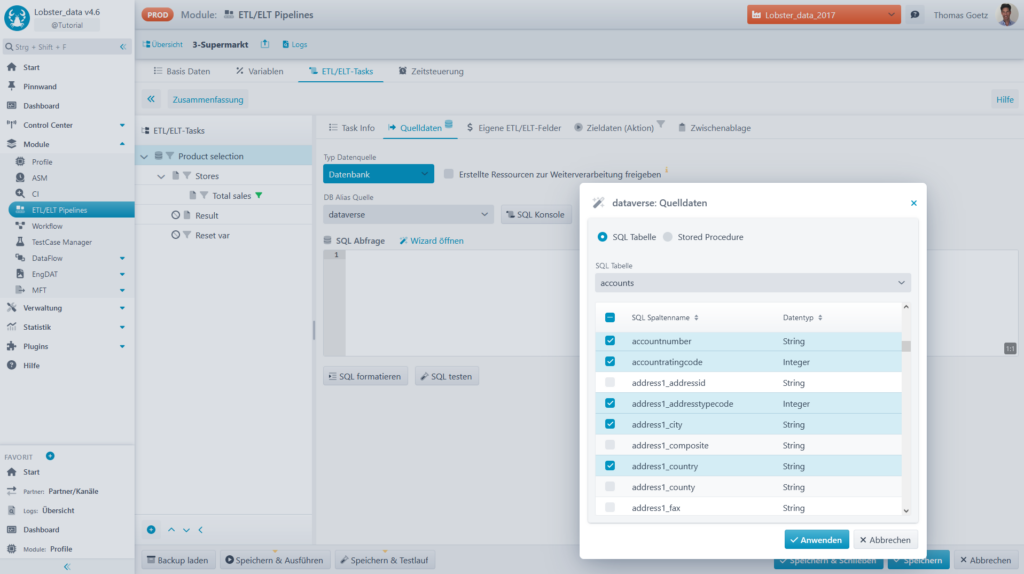

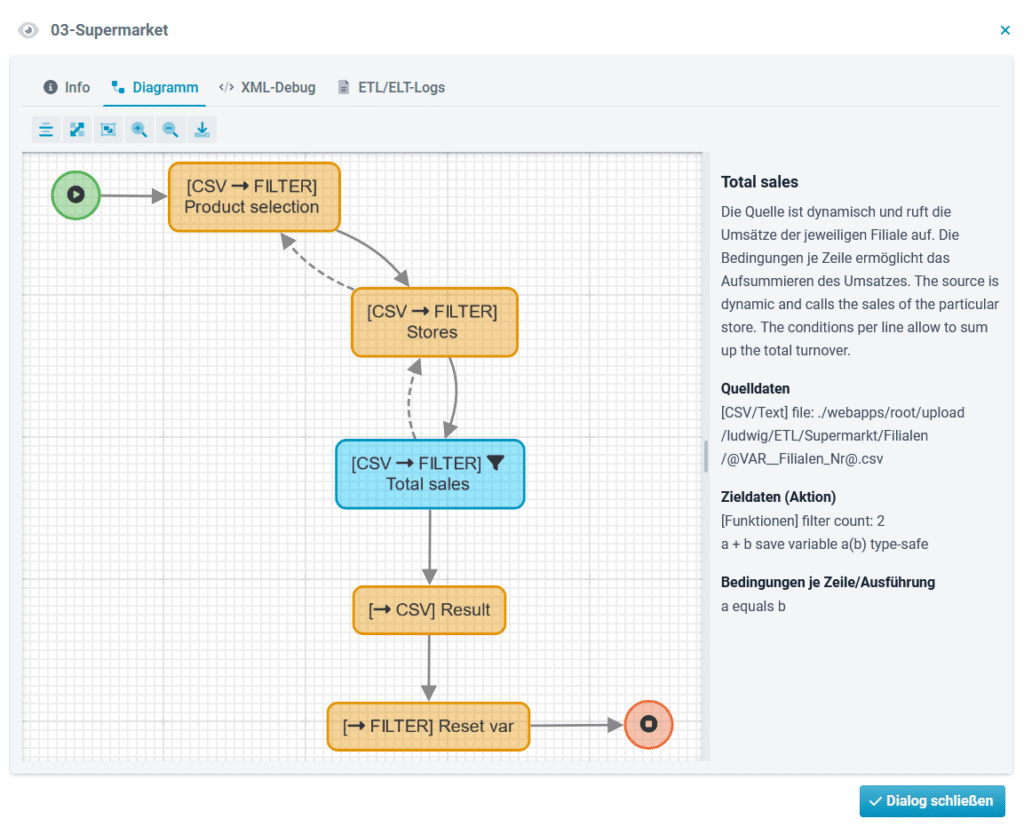

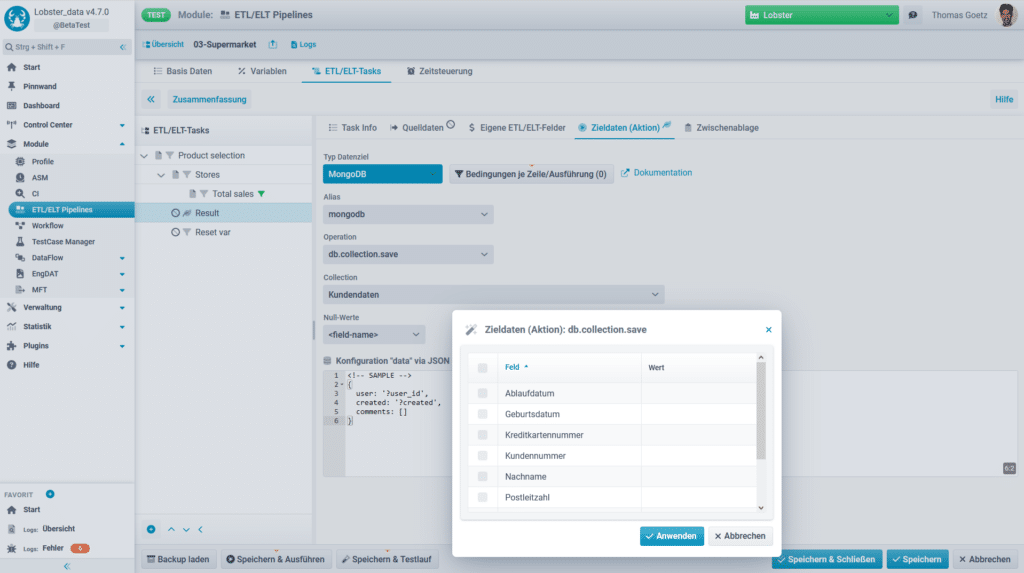

The optional ETL/ELT module in Lobster_data not only extracts data from source systems but also loads it into target systems. It transforms unstructured data in the backend at top speed, all on one platform. Data mining powered by machine learning then feeds your downstream business intelligence and helps you optimally support and streamline all processes of your business model.

CUSTOMERS WHO RELY ON LOBSTER.

Refining mass data. In 5 steps.

ETL and ELT are nothing new, but they are becoming increasingly important again due to high-performance system architectures such as Hadoop. Both approaches turn raw data into business data by means of extraction, transformation and loading. The difference lies in the timing of the transformation. With ETL, the data is transformed before it is loaded into the target system, the data warehouse (DWH). With ELT, it is transformed after it has been loaded into the data lake. ETL therefore loads cleansed data, while ELT loads raw data. Which is better depends on the objective. The ETL/ELT module in Lobster_data can do both.

- Sourcing

- Extraction

- Transformation

- Loading

- Dashboards & Reporting
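To make the difference in timing concrete, here is a minimal Python sketch. It is not Lobster_data's implementation (the module is configured without code); it simply models the data warehouse and the data lake as in-memory lists. The record fields, the sample data and the `cleanse`-style rule inside `transform` are hypothetical. Both pipelines use the same extraction and transformation; only the position of the transformation step changes.

```python
from typing import Iterable

# Hypothetical raw records as they might arrive from a source system.
SOURCE = [
    {"customer": " Alice ", "amount": "120.50", "date": "2024-01-03"},
    {"customer": "bob", "amount": "n/a", "date": "2024-01-03"},
    {"customer": "Carol", "amount": "75", "date": "2024-01-04"},
]


def extract(source: Iterable[dict]) -> list[dict]:
    """Extraction: copy records out of the source system unchanged."""
    return [dict(record) for record in source]


def transform(records: list[dict]) -> list[dict]:
    """Transformation: a hypothetical cleansing rule.

    Normalises customer names, converts amounts to float and
    drops records whose amount cannot be parsed.
    """
    cleansed = []
    for record in records:
        try:
            amount = float(record["amount"])
        except ValueError:
            continue  # discard rows with an unusable amount
        cleansed.append({
            "customer": record["customer"].strip().title(),
            "amount": amount,
            "date": record["date"],
        })
    return cleansed


def etl(source: Iterable[dict], warehouse: list[dict]) -> None:
    """ETL: transform first, then load only cleansed data into the warehouse."""
    warehouse.extend(transform(extract(source)))


def elt(source: Iterable[dict], lake: list[dict]) -> list[dict]:
    """ELT: load raw data into the lake first, transform it afterwards."""
    lake.extend(extract(source))
    # The transformation runs on the lake's raw contents, after loading.
    return transform(lake)


warehouse: list[dict] = []
lake: list[dict] = []
etl(SOURCE, warehouse)
analysis = elt(SOURCE, lake)
```

After running both pipelines, the warehouse holds only the two cleansed rows, while the lake keeps all three raw rows (including the unparseable one) and produces the same cleansed view only when it is transformed for analysis.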

Many use cases.

For more utility.

Extraction works in much the same way for ETL and ELT; what makes the difference is when the transformation takes place. Depending on the use case, you should therefore choose either ETL or ELT.

With ETL, the transformation takes place in the second step, so enriched data is already available once it has been loaded into the data warehouse. It is ready to use, but limited to its original purpose.

With ELT, on the other hand, raw or only slightly pre-filtered data is transferred directly to the target system. As a result, it is available very quickly, historically complete and in larger quantities. However, it is not immediately usable, because it must first be processed for analysis.
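The trade-off between "limited to the original purpose" and "historically complete" can be illustrated with a small hypothetical Python sketch. It assumes an ETL pipeline that was built to report revenue per day, so the warehouse only stores daily totals, while an ELT data lake keeps every raw order. The order fields and figures are invented for illustration. A later question, revenue per customer, can still be answered from the lake after processing, but not from the warehouse totals.

```python
from collections import defaultdict

# Hypothetical raw orders, kept unchanged in the data lake (ELT).
LAKE = [
    {"customer": "Alice", "amount": 120.5, "date": "2024-01-03"},
    {"customer": "Bob", "amount": 30.0, "date": "2024-01-03"},
    {"customer": "Alice", "amount": 75.0, "date": "2024-01-04"},
]


def revenue_per_day(orders: list[dict]) -> dict[str, float]:
    """Original purpose of the hypothetical ETL pipeline: revenue per day."""
    totals: dict[str, float] = defaultdict(float)
    for order in orders:
        totals[order["date"]] += order["amount"]
    return dict(totals)


def revenue_per_customer(orders: list[dict]) -> dict[str, float]:
    """A new question that needs the raw, customer-level records."""
    totals: dict[str, float] = defaultdict(float)
    for order in orders:
        totals[order["customer"]] += order["amount"]
    return dict(totals)


# ETL: only the transformed result (daily totals) reaches the warehouse.
WAREHOUSE = revenue_per_day(LAKE)

# The warehouse no longer carries a customer dimension, so the new
# question has to be answered by processing the raw lake data.
BY_CUSTOMER = revenue_per_customer(LAKE)
```

Here the warehouse contains `{"2024-01-03": 150.5, "2024-01-04": 75.0}`, which is ready to use for daily reporting but cannot be broken down by customer; the raw lake data can, at the cost of an extra processing step.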

- Data management
  - Central storage of raw data from different functional areas
  - Accessibility of data via data warehouses
  - Easy access to relevant, condensed data through data catalogues
  - Creation of a secure information base for the entire company
  - Democratised access to high-quality data

- Business intelligence
  - Improvement of the basis for decision-making, especially for cross-departmental processes
  - Reliable analysis of the business situation
  - Large amounts of data in a formatted, uniform, consistent and trustworthy form for reporting
  - Identification of previously unrecognised trends and patterns in the business
  - Faster response to market and internal changes

- Data-driven decision-making
  - Data-based optimisation of business processes
  - Increased efficiency in all business areas through data-based decisions
  - Automation of decision-making processes through high-performance and reliable data analyses
  - Relief for employees, leaving more time for core tasks
  - Use of predictive and prescriptive analytics to forecast developments and derive appropriate action plans
  - Greater corporate resilience

- Machine learning & AI
  - Use of consolidated and secured mass data as input
  - Systematic examination of data for patterns and correlations to generate predictive algorithms (e.g. analysis of customer behaviour to predict purchasing decisions)
  - Use of deep learning with large, labelled data sets
  - Applications such as automatic text, image or speech recognition

- IoT & Industry 4.0
  - Big Data generation through IoT devices
  - Analytical processing of Big Data
  - Monitoring of IoT devices through sensor data
  - Data processing to increase efficiency within the framework of Industry 4.0
  - Predictive maintenance through AI-based prediction of maintenance needs
  - Development of new digital IoT business models
  - Analysis of data from various sources for the development of customised products and services

Many benefits. For more utility.

- No-code technology
  - Configuring instead of programming
  - Preconfigured modules and a user-friendly graphical interface
  - No-code as an answer to the shortage of skilled IT and analytics staff: specialist departments digitise their processes on their own initiative
  - Step-by-step digital transformation through a snowball effect in the company (employees inspire employees)

- Integration of back- and frontend
  - Creation of individual dashboards
  - Acceptance and deeper understanding of Big Data through clear frontend interfaces
  - No restrictive, prefabricated standard schemas
  - Individually customisable reporting tools
  - Accessible and usable by everyone in the company

- Central platform
  - Coverage of almost all use cases with a single piece of software
  - Reduced integration effort
  - Seamless collaboration between different teams
  - Fast connection of even complex software products through ready-made connectors
  - Industry-agnostic data integration and process automation

- Scalability
  - Step-by-step entry into Big Data processing
  - Sustainable, organic development of digital competence in the company
  - A system that grows with the company
  - Scalable performance at predictable costs

- Performance
  - High performance for Big Data processing
  - Resource-efficient execution of complex data cleansing and transformation rules
  - Minimal latency and fast availability of source data in the target system
  - Ability to offload processing to external capacity
  - Use of remote servers such as Lobster Bee (Docker container image) to free up central computing capacity

It's all about the people.

Lobster develops and distributes no-code software solutions for digital progress: solutions that question convention, rethink digital transformation and focus on people without losing sight of the bottom line. Don’t just take our word for it. Our figures speak for themselves.

FOR IMPLEMENTATION

WITH SHORT PAYBACK TIME

WITH ONE TOOL